🛎️ Nuclear Inspectors

Good Morning, AI Enthusiasts!

The future of oversight doesn’t wear a badge. It runs on silicon.

NUCLEAR

Japan Wants AI Inspectors for Nuclear Plants

📌 What’s happening: Japan’s Nuclear Regulation Authority is asking for new funds to test whether AI can inspect reactors — both the aging fleet that’s limping toward decommissioning and the next-gen designs that regulators haven’t seen before. The problem is simple: too many plants, not enough inspectors, and Fukushima still burns in memory.

🧠 How this hits reality: Nuclear oversight is the definition of “no mistakes allowed.” If AI can crunch decades of plant logs, sensor feeds, and regulatory filings to flag anomalies, it could slash manpower bottlenecks and speed up reviews. But it also drags AI into one of the most regulated, failure-averse industries on earth. The incumbents (inspection firms, engineering contractors, safety consultants) now face the risk of algorithms eating their margins.

🛎️ Key takeaway: AI won’t run reactors, but if it rewrites inspection, the nuclear industry’s moat won’t be concrete — it’ll be code.

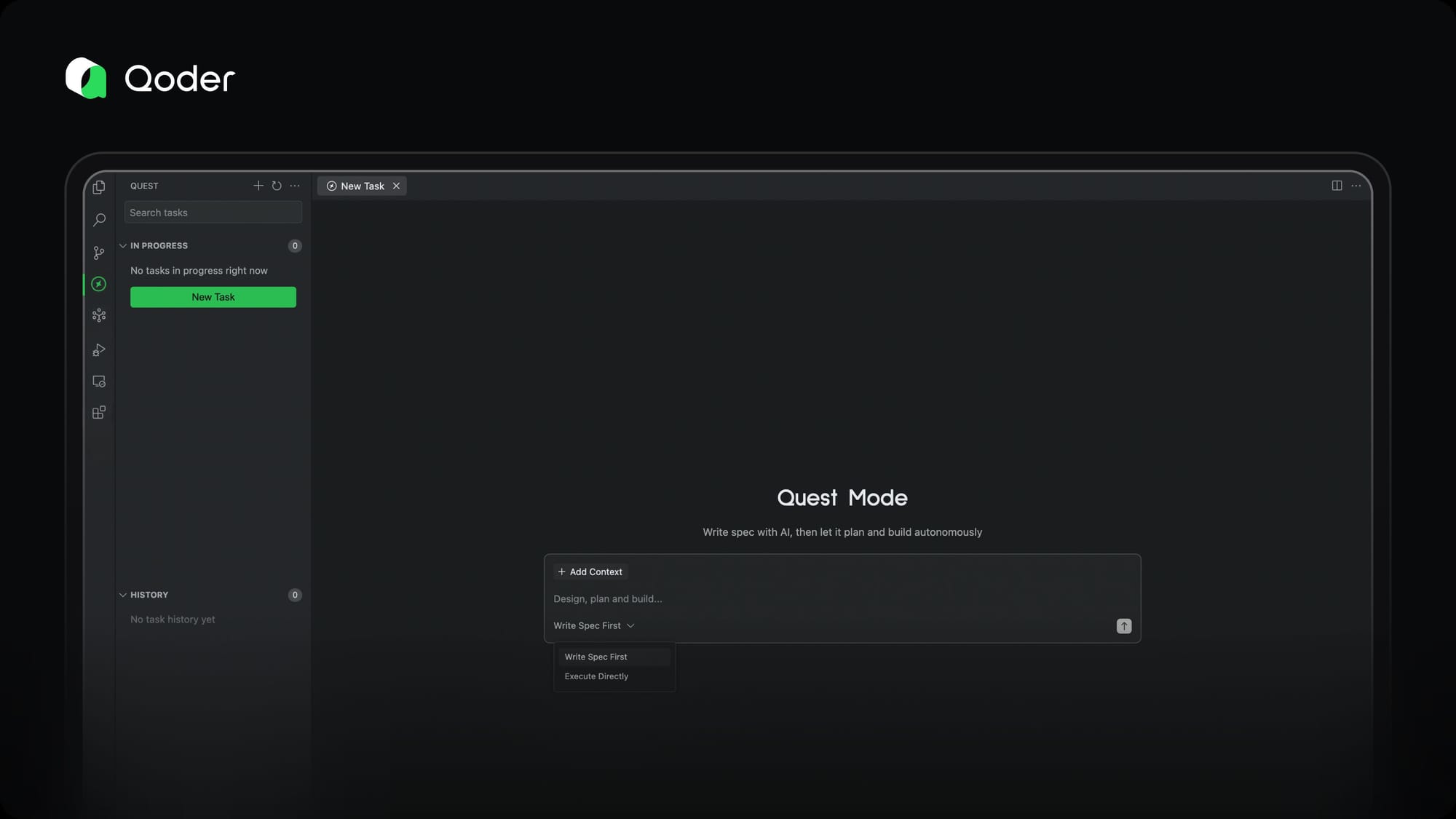

TOGETHER WITH QODER

Let Qoder Code While You Sleep

100,000 developers in just five days. That’s the momentum behind Qoder’s preview launch — and it’s no accident. Qoder isn’t another AI IDE. It’s the agentic coding platform built to deliver real software, not half-finished drafts. Drop in a spec, and Qoder Agents take it from there: planning, coding, testing, validating — all handled seamlessly in the background.

With Quest Mode, your ideas become production-ready code. No micromanaging, no back-and-forth — just describe the logic, and let Qoder run. And now, every developer gets free Claude 4 usage inside Qoder, bringing one of the world’s top LLMs directly into your workflow.

The shift is happening: coding is no longer assisted, it’s delegated. Think Deeper. Build Better.

LLM

Anthropic and OpenAI Just Audited Each Other’s Homework

📌 What’s happening: Anthropic and OpenAI quietly ran a joint “safety swap,” putting each other’s public models through alignment stress tests covering sycophancy, misuse, self-preservation, and whistleblowing. Both then blog-dumped the results: Anthropic reported that OpenAI’s GPT-4o and GPT-4.1 still showed concerning misuse behaviors, while OpenAI said Claude 4 struggled under jailbreak pressure.

🧠 How this hits reality: This isn’t kumbaya—it’s preemptive regulatory theater. By auditing each other, the labs are trying to set the bar for “responsible” oversight before Washington or Brussels does it for them. For startups, it means the definition of “alignment best practices” may soon come from two incumbents writing their own standards. For investors, it’s a moat: compliance by cartel. And for rival labs, it’s a signal—get on board or get painted as reckless.

🛎️ Key takeaway: The foxes are building the henhouse’s inspection rules—then grading each other’s report cards.

CALL CENTER

911’s New Operator Isn’t Human

📌 What’s happening: Aurelian, once a YC hair-salon booking app, just raised $14M from NEA after pivoting into the broken heart of U.S. infrastructure: 911 call centers. Its AI voice agent already fields thousands of non-emergency calls (noise complaints, stolen wallets, parking squabbles) across a dozen cities, routing true crises to human dispatchers and logging the rest without burning out already overworked staff.

🧠 How this hits reality: Dispatch is a high-turnover, understaffed grind where 12-hour shifts are common. Aurelian’s AI doesn’t replace jobs—it absorbs the “phantom headcount” cities can’t afford to hire. That’s a structural unlock: lighter workloads, faster triage, fewer lives lost in the backlog. And because Aurelian is live—while rivals Hyper and Prepared are still piloting—it’s quietly cornering the credibility moat.

🛎️ Key takeaway: Aurelian turned a parking-lot complaint into a public safety wedge—and just automated the front desk of America’s emergency system.

QUICK HITS

- Google makes its AI video editor Vids available to everyone, while new features like AI avatars remain exclusive to paid plans.

- YouTube is quietly altering videos with AI, sparking creators’ fears over distorted content and eroded audience trust.

- A new report from Anthropic warns that "vibe-hacking" has become a top threat as criminals use AI for sophisticated extortion and fraud.

- WhatsApp has launched a new AI feature that can rewrite your messages in different tones using privacy-preserving technology.

- Bluente, founded by an ex-Bain consultant, raised $1.5M to deliver formatted translations, positioning itself as workflow infrastructure rather than just another translation app.

TRENDING

Daily AI Launches

- Webvizio launched a new feature that automatically converts non-technical feedback and bug reports into development tasks ready for AI coding agents.

- Rube launched a universal connector that lets users operate over 600 external applications directly from within a single AI chat window.

- SoWork released a major update for its remote work platform, featuring comprehensive upgrades to performance, UI/UX, and its mobile apps.

- Trace launched a form-free scheduling tool that instantly converts a text message, voice note, or screenshot into a calendar event.

Featured AI Tools

- 🗣️ VoiceType lets you write 9x faster with AI voice typing that works everywhere and protects your privacy.

- 🎓 Resea AI is the first academic agent for end-to-end research workflows.

- 📚 Heardly is the fast way to read the best books.

- 🪶 CopyOwl is the first AI research agent, delivering deep research on any topic in one click.

- 🦾 Flot AI writes, reads, and remembers across any app or website.

TOGETHER WITH US

AI Secret Media Group is the world’s #1 AI & Tech Newsletter Group, boasting over 1 million readers from leading companies such as OpenAI, Google, Meta, and Microsoft. Our Newsletter Brands:

- AI: AI Secret

- Tech: Bay Area Letters

- Futuristic: Posthuman

We've helped promote over 500 tech brands. Will yours be the next?

Email our co-founder Mark directly at mark@aisecret.us.
